This short example will help you experiment with mining Twitter data and text processing.

The first step is to install the packages used in this example project:

[for Twitter mining] twitteR

[for text mining and analysis] dplyr, tidytext

[for data visualization] ggplot2, wordcloud
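
If you prefer to install everything from the R console, a minimal sketch like the following installs only the packages that are still missing. The CRAN mirror URL is an assumption; any mirror you normally use will work.

```r
# Packages used in this example project
pkgs <- c("twitteR", "dplyr", "tidytext", "ggplot2", "wordcloud")

# Find the packages that are not installed yet
to_install <- setdiff(pkgs, rownames(installed.packages()))

# Install only the missing ones (assumes a reachable CRAN mirror)
if (length(to_install) > 0) {
  install.packages(to_install, repos = "https://cloud.r-project.org")
}
```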

Once you have installed the necessary packages, you will need your Twitter user credentials to connect to Twitter and download tweet data. This web resource is a good place to start learning about the twitteR package and Twitter user credentials: https://www.r-bloggers.com/setting-up-the-twitter-r-package-for-text-analytics/

This R script will help run an initial experiment to mine Twitter content and run text analysis on your data using the search term [my professor just]:

Now we can step through a brief description of the example R script above.

The first set of commands loads the twitteR package, assigns your user credentials to objects [paste your user credentials between the quotation marks], and opens a Twitter access session with setup_twitter_oauth( ). The example code then stores the text string [phrase] to search for in an object. The string "my professor just" is simply an example; you can use any other string or hashtag you want. The function searchTwitter( ) sends your search request to Twitter and returns the results, which are stored in an object. The n = 3000 parameter limits the search to at most 3000 tweets that match the search terms [by default, Twitter matches tweets that contain all of the words, in any order; wrap the phrase in quotation marks to match only the exact phrase]. Another example of the searchTwitter( ) command is shown as a comment. This example shows you how to designate a time window with since/until dates. Notice that the date format used is YYYY-MM-DD.
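
A minimal sketch of these steps might look like the following; it is not the author's script. The helper name fetch_tweets( ) and the credential object names are hypothetical, the calls are written as twitteR::... so the helper can be defined before the package is loaded, and actually running a search requires valid credentials and network access. The since/until dates in the commented call are placeholders.

```r
# Paste your user credentials between the quotation marks
consumer_key    <- ""
consumer_secret <- ""
access_token    <- ""
access_secret   <- ""

# Hypothetical helper: open a Twitter session, then run one search.
# n caps the number of tweets returned; since/until take "YYYY-MM-DD" strings.
fetch_tweets <- function(search_string, n = 3000, since = NULL, until = NULL) {
  twitteR::setup_twitter_oauth(consumer_key, consumer_secret,
                               access_token, access_secret)
  twitteR::searchTwitter(search_string, n = n, since = since, until = until)
}

# Example calls (need valid credentials and network access):
# tweets <- fetch_tweets("my professor just")
# tweets <- fetch_tweets("my professor just",
#                        since = "2017-10-01", until = "2017-10-08")
```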

The search and download may take a while. The object containing the downloaded tweets is converted to a data frame so it is easier to work with. The dim( ) function verifies the number of rows and columns in the data frame. The code then saves the Twitter data as both a .csv and an RData file. You can edit this section based on your data storage preferences.
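
The conversion and saving steps can be sketched as follows. twListToDF( ) is the twitteR function that turns the list returned by searchTwitter( ) into a data frame; it is shown as a comment because it needs real search results. The helper save_tweet_data( ), the file name stem, and the tiny made-up data frame used to exercise it are all hypothetical.

```r
# With real search results you would first convert the list of tweets:
# tweets_df <- twitteR::twListToDF(tweets)

# Hypothetical helper: report the size of the data frame, then save it
# both as a .csv file and as an .RData file.
save_tweet_data <- function(tweets_df, file_stem, dir = ".") {
  print(dim(tweets_df))  # rows = tweets, columns = tweet attributes
  csv_path   <- file.path(dir, paste0(file_stem, ".csv"))
  rdata_path <- file.path(dir, paste0(file_stem, ".RData"))
  write.csv(tweets_df, csv_path, row.names = FALSE)
  save(tweets_df, file = rdata_path)
  c(csv = csv_path, rdata = rdata_path)
}

# Illustrative call on a tiny made-up data frame (not real tweets),
# written to a temporary directory
demo_df <- data.frame(text = c("first tweet", "second tweet"),
                      favoriteCount = c(0, 3),
                      retweetCount = c(1, 0))
paths <- save_tweet_data(demo_df, "my_professor_just", dir = tempdir())
```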

The next section of code will process the tweets. Before beginning, we will load the necessary packages [dplyr, tidytext, ggplot2, and wordcloud].

Before beginning the analysis, you can view the first few tweets with the head( ) function. This example analyzes several of the tweet attributes: created [the creation datetime of the tweet], favoriteCount [the number of times the tweet was favorited], retweetCount [the number of times the tweet was retweeted], and text [the text content of the tweet].

The first section of analysis determines the time span of the data. We sort the tweets and look at the oldest [using head( )] and newest [using tail( )] creation datetime stamps. Once we are done exploring the data time span, we clean up the R environment with the rm( ) function.
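
A minimal base R sketch of this exploration, assuming the data frame is called tweets_df and that created holds date-times (POSIXct). The three rows below are made up purely for illustration.

```r
# Made-up stand-in for the real tweets data frame
tweets_df <- data.frame(
  text = c("tweet b", "tweet a", "tweet c"),
  created = as.POSIXct(c("2017-10-02 10:00:00", "2017-10-01 08:30:00",
                         "2017-10-03 21:15:00"), tz = "UTC"),
  favoriteCount = c(2, 0, 5),
  retweetCount = c(0, 1, 0)
)

head(tweets_df)  # view the first few tweets

# Keep only the attributes analyzed in this example
tweets_df <- tweets_df[, c("created", "favoriteCount", "retweetCount", "text")]

# Sort by creation time; head() gives the oldest tweet, tail() the newest
by_time <- tweets_df[order(tweets_df$created), ]
oldest <- head(by_time$created, 1)
newest <- tail(by_time$created, 1)
time_span <- difftime(newest, oldest, units = "hours")
print(time_span)

# Clean up the helper object once the time span has been explored
rm(by_time)
```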

The next section of analysis explores the favorited tweets. These are tweets that someone liked enough to designate as a "favorite". We start this analysis by tabulating the values in the favoriteCount attribute of the Twitter data. The bar plot of the tabulation results has a distinctive shape: many tweets were not favorited at all or were favorited only a few times, while the number of highly favorited tweets is small, hence the long, thin tail to the right of the chart. We will see this shape in several of our other analyses. After plotting the favoriteCount frequency tabulation, the analysis creates two data sets, one with the highest favoriteCount values and another with a favoriteCount of 0. You can set the count threshold for the high favoriteCount data based on the results of the tabulation and the number of tweets you want in this data set. The 0 favoriteCount data set is trimmed to 30 rows; if you want more rows, edit the command. The example code saves the two data sets as .csv files for later analysis. Once the data is saved into files, we clean up the R environment with the rm( ) function.
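
The favoriteCount steps can be sketched in base R as follows. The counts are made up, the threshold of 10 and the output file names are placeholders, and the files are written to tempdir( ) only so the sketch does not write into your working directory.

```r
# Made-up favorite counts standing in for the real tweets data frame
tweets_df <- data.frame(
  text = paste("tweet", 1:8),
  favoriteCount = c(0, 0, 0, 1, 0, 2, 15, 40)
)

# Frequency tabulation: how many tweets received each favorite count
fav_table <- table(tweets_df$favoriteCount)
barplot(fav_table, xlab = "favoriteCount", ylab = "Number of tweets")

# Highly favorited tweets (placeholder threshold: pick it from the table)
fav_threshold <- 10
high_fav <- tweets_df[tweets_df$favoriteCount >= fav_threshold, ]

# Tweets that were never favorited, trimmed to at most 30 rows
zero_fav <- head(tweets_df[tweets_df$favoriteCount == 0, ], 30)

# Save both data sets for later analysis, then clean up
write.csv(high_fav, file.path(tempdir(), "high_favorites.csv"), row.names = FALSE)
write.csv(zero_fav, file.path(tempdir(), "zero_favorites.csv"), row.names = FALSE)
rm(high_fav, zero_fav)
```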

The following section of analysis explores the retweeted tweets. These are tweets that someone liked enough to share as a retweet. We start this analysis by tabulating the values in the retweetCount attribute of the Twitter data. The bar plot of the tabulation results has a distinctive shape: many tweets were not retweeted at all or were retweeted only a few times, while the number of tweets that were retweeted many times is small, hence the long, thin tail to the right of the chart. After plotting the retweetCount frequency tabulation, the analysis creates two data sets, one with the highest retweetCount values and another with a retweetCount of 0. You can set the count threshold for the high retweetCount data based on the results of the tabulation and the number of tweets you want in this data set. The 0 retweetCount data set is trimmed to 30 rows; if you want more rows, edit the command. The example code saves the two data sets as .csv files for later analysis. Once the data is saved into files, we clean up the R environment with the rm( ) function.
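
For the retweet analysis, the same steps can be written with dplyr and ggplot2, two of the packages loaded earlier. This is a sketch under assumptions: the counts are made up, the threshold of 3 and the file names are placeholders, and slice_head( ) requires dplyr 1.0.0 or later.

```r
library(dplyr)
library(ggplot2)

# Made-up retweet counts standing in for the real tweets data frame
tweets_df <- tibble(
  text = paste("tweet", 1:8),
  retweetCount = c(0, 3, 0, 0, 1, 0, 25, 0)
)

# Frequency tabulation: number of tweets (n) for each retweet count
rt_freq <- tweets_df %>% count(retweetCount)

rt_plot <- ggplot(rt_freq, aes(x = factor(retweetCount), y = n)) +
  geom_col() +
  labs(x = "retweetCount", y = "Number of tweets")
print(rt_plot)

# Highly retweeted tweets (placeholder threshold) and never-retweeted tweets
rt_threshold <- 3
high_rt <- tweets_df %>% filter(retweetCount >= rt_threshold)
zero_rt <- tweets_df %>% filter(retweetCount == 0) %>% slice_head(n = 30)

# Save both data sets for later analysis, then clean up
write.csv(high_rt, file.path(tempdir(), "high_retweets.csv"), row.names = FALSE)
write.csv(zero_rt, file.path(tempdir(), "zero_retweets.csv"), row.names = FALSE)
rm(high_rt, zero_rt)
```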

The final section provides an example of text mining the Twitter data set. The first step of processing extracts the text content and converts it to a data frame of individual words. Next, the data frame of words is converted to individual tokens [words reduced to common roots, for example: dog and dogs will both become dog]. Stop words [common words that do not convey meaning, like 'the', 'a', 'and', 'but', etc.] are removed, leaving the principal words in the tweet text. The stop word processing can be modified to include other words or terms of little interest, such as 'professor' from our example search phrase. You can do this by adding your own list of words, created with the c( ) R function, to the stop_words object.
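
A minimal tidytext sketch of the tokenizing and stop word steps, using two made-up tweets. Note that unnest_tokens( ) splits text into lowercase words with punctuation removed but does not reduce words to their roots; stemming would need an extra step, for example SnowballC::wordStem( ), which this sketch leaves out. The extra stop words and the "custom" lexicon label are assumptions.

```r
library(dplyr)
library(tidytext)

# Two made-up tweets standing in for the real tweet text
tweets_df <- tibble(text = c("My professor just cancelled class!",
                             "my professor just gave us the answers"))

# Extend tidytext's stop_words with terms from the search phrase;
# c() builds the vector of extra words
custom_stop <- tibble(word = c("professor", "just"), lexicon = "custom")
all_stop <- bind_rows(stop_words, custom_stop)

tweet_words <- tweets_df %>%
  unnest_tokens(word, text) %>%     # one lowercase word per row
  anti_join(all_stop, by = "word")  # drop stop words

tweet_words
```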

The example code now creates a bar chart of the most frequent word tokens in the tweet text. You can adjust how many tokens are shown in this chart by changing the filter( ) value. Notice that this chart has the same shape as our previous frequency charts.

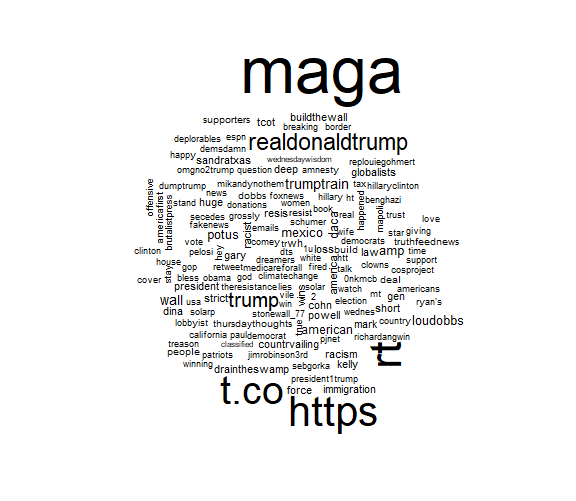
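
The bar chart step could look roughly like this with dplyr and ggplot2. The word list is made up, and min_count is a hypothetical name standing in for the filter( ) value; raising it shows fewer, more frequent tokens.

```r
library(dplyr)
library(ggplot2)

# Made-up word tokens standing in for the tokenized tweet text
tweet_words <- tibble(word = c("class", "exam", "class", "grade", "exam",
                               "class", "email", "grade", "class"))

min_count <- 2  # change this filter() value to show more or fewer tokens

word_freq <- tweet_words %>%
  count(word, sort = TRUE) %>%       # frequency of each token
  filter(n >= min_count) %>%         # keep only the frequent tokens
  mutate(word = reorder(word, n))    # order bars by frequency

word_plot <- ggplot(word_freq, aes(x = word, y = n)) +
  geom_col() +
  coord_flip() +
  labs(x = NULL, y = "Word count")
print(word_plot)
```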

Finally, the code creates a wordcloud of the 150 most frequent word tokens in the tweet text. You can modify the number of words shown in the wordcloud to achieve a suitable image.
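
A short sketch of the wordcloud step with the wordcloud package. The counts are made up, and max_words is a hypothetical name for the 150-word limit; min.freq = 1 is set so that rare words are not silently dropped by the function's default minimum frequency.

```r
library(wordcloud)

# Made-up token counts standing in for the real word frequencies
word_counts <- data.frame(
  word = c("class", "exam", "grade", "email", "lecture"),
  n = c(40, 25, 18, 7, 3),
  stringsAsFactors = FALSE
)

max_words <- 150  # change this to show more or fewer words

# Keep the most frequent tokens, largest counts first
top_words <- head(word_counts[order(-word_counts$n), ], max_words)

set.seed(1234)  # word placement is random; a fixed seed repeats the layout
wordcloud(words = top_words$word, freq = top_words$n,
          min.freq = 1, max.words = max_words, random.order = FALSE)
```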

Here are dataset results [as .csv files] from the KEYS 4014 project, grouped by search term:

14words charts [as .png files]: favoriteCount frequency, retweetCount frequency, tweet word frequency, wordcloud

altright charts [as .png files]: favoriteCount frequency, retweetCount frequency, tweet word frequency, wordcloud

IdentityEuropa charts [as .png files]: favoriteCount frequency, retweetCount frequency, tweet word frequency, wordcloud

MAGA charts [as .png files]: favoriteCount frequency, retweetCount frequency, tweet word frequency, wordcloud

WhiteNationalism charts [as .png files]: favoriteCount frequency, retweetCount frequency, tweet word frequency, wordcloud